3D Semantic Instance Maps: Leveraging Foundation Models for Language-Guided Navigation

Table of Links

Abstract and 1 Introduction

Related Works

2.1. Vision-and-Language Navigation

2.2. Semantic Scene Understanding and Instance Segmentation

2.3. 3D Scene Reconstruction

Methodology

3.1. Data Collection

3.2. Open-set Semantic Information from Images

3.3. Creating the Open-set 3D Representation

3.4. Language-Guided Navigation

Experiments

4.1. Quantitative Evaluation

4.2. Qualitative Results

Conclusion and Future Work, Disclosure statement, and References

ABSTRACT

Humans excel at forming mental maps of their surroundings, equipping them to understand object relationships and navigate based on language queries. Our previous work, SI Maps [1], showed that instance-level information and a semantic understanding of the environment significantly improve performance on language-guided tasks. We extend this instance-level approach to 3D while increasing the pipeline's robustness and improving quantitative and qualitative results. Our method leverages foundational models for object recognition, image segmentation, and feature extraction. We propose a representation that results in a 3D point cloud map with instance-level embeddings, which provide the semantic understanding that natural language commands can query. Quantitatively, the work improves the success rate of language-guided tasks. Qualitatively, we observe that instances are identified more clearly, and that the foundational models and image-language-aligned embeddings identify objects that a closed-set approach would otherwise be unable to identify.

1. Introduction

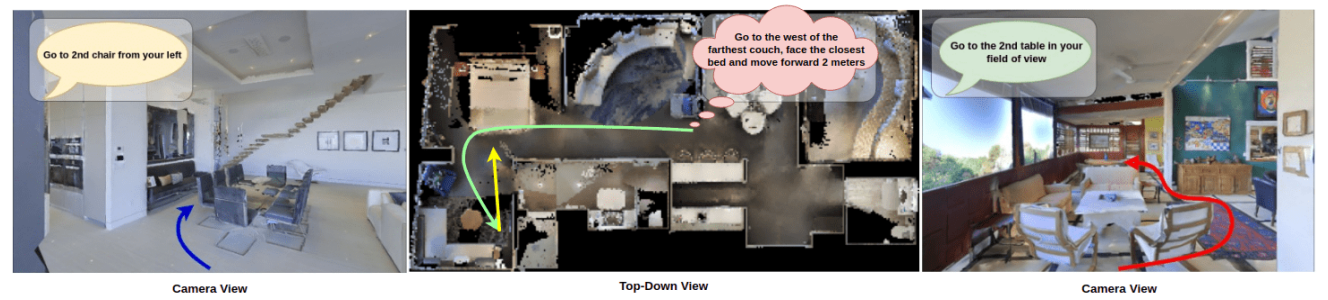

Vision-Language Navigation (VLN) research has recently seen significant progress, enabling robots to navigate environments using natural language instructions [2, 3]. A major challenge in this field is grounding language descriptions onto real-world visual observations, especially for tasks that require identifying specific object instances and reasoning spatially [4]. There is increasing interest in building semantic spatial maps that represent the environment and its objects [5]. Works like VLMaps [2] and NLMap [6] leverage pre-trained vision-language models to construct semantic spatial maps (SSMs) without manual labelling. However, these methods cannot differentiate between multiple instances of the same object, limiting their utility for instance-specific queries.

Our previous work [1] proposed a memory-efficient mechanism for creating a 2D semantic spatial representation of the environment with instance-level semantics, directly applicable to robots navigating in real-world scenes. It showed that Semantic Instance Maps (SI Maps) are computationally efficient to construct and allow for a broad range of complex and realistic commands that elude prior works. However, the map built in this previous work is a top-down 2D map based on a closed set, and it assumes that the Large Language Model (LLM) knows all object categories in advance so that it can map language queries to a specific object class. The 2D maps, though sufficient for a good range of tasks, limit performance when larger objects obscure smaller ones.

Building upon [1], we introduce Open-set 3D Semantic Instance Maps (O3D-SIM), a novel approach that addresses the limitation of traditional closed-set methods [7, 8], which assume that only a predefined set of objects will be encountered during operation. In contrast, O3D-SIM leverages methods that identify and potentially categorize unseen objects not explicitly included in the training data. This is crucial for real-world scenarios with diverse and unseen objects, and it enables better performance and complex query handling for unseen object categories. We address the previously mentioned limitations by enabling instance-level object identification within the spatial representation and by operating in an open-set manner. We have achieved major improvements over our previous work, which operated in a closed-set manner. The current pipeline leverages state-of-the-art foundational models like CLIP [9] and DINOv2 [10]. These models extract semantic features from images, allowing them to recognize objects and understand the finer details and relationships between them. For example, the DINOv2 [10] model, trained on various chair images, can identify a chair in a new image and distinguish between a dining chair and an office chair.

This paper details the proposed pipeline for creating the 3D map and evaluates its effectiveness in VLN tasks for both simulation and real-world data. Experimental evaluations demonstrate the improvements achieved through our open-set approach, with an increase in correctly identified object instances, bringing our results closer to ground truth values even in challenging real-world data. Additionally, our pipeline achieves a higher success rate for complex language navigation queries targeting specific object instances, including those unseen during training, such as mannequins or bottles.

The contributions of our work can be summarized as follows:

(1) Extending the closed-set 2D instance-level approach from our previous work [1] to an open-set 3D Semantic Instance Map using state-of-the-art image segmentation, open-set image-language-aligned embeddings, and hierarchical clustering in 3D. The resulting map consists of a 3D point cloud with instance-level embeddings, enhancing the semantic understanding.

(2) Validating the effectiveness of the 3D map approach to VLN tasks through both qualitative and quantitative experiments.
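To make the idea of a point cloud with instance-level embeddings more concrete, here is a minimal sketch of how such a map could be queried. It is not the pipeline from this paper: the `Instance` class, the `query_map` function, and the three-dimensional toy vectors are hypothetical, and the sketch assumes that a text query has already been embedded into the same space as the instance embeddings by an image-language-aligned model such as CLIP.

```python
import math
from dataclasses import dataclass


@dataclass
class Instance:
    """One object instance in the map (hypothetical structure, not from the paper)."""
    instance_id: int
    points: list      # list of (x, y, z) tuples belonging to this instance
    embedding: list   # feature vector, assumed to share a space with text queries


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def centroid(points):
    """Mean 3D position of an instance, usable as a rough navigation goal."""
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(3))


def query_map(instances, text_embedding, top_k=1):
    """Rank instances by similarity between their embedding and the query embedding."""
    ranked = sorted(
        instances,
        key=lambda inst: cosine(inst.embedding, text_embedding),
        reverse=True,
    )
    return ranked[:top_k]


# Toy map: two separate chair instances and one bottle (made-up values).
chair_a = Instance(0, [(0.0, 0.0, 0.0), (1.0, 0.0, 1.0)], [0.9, 0.1, 0.0])
chair_b = Instance(1, [(4.0, 2.0, 0.0), (4.0, 4.0, 1.0)], [0.8, 0.2, 0.1])
bottle = Instance(2, [(2.0, 2.0, 1.0)], [0.0, 0.1, 0.9])
toy_map = [chair_a, chair_b, bottle]

# A made-up "bottle-like" query embedding; a real one would come from a text encoder.
best = query_map(toy_map, [0.1, 0.0, 1.0])[0]
goal = centroid(best.points)
```

Because every instance keeps its own points and embedding, the two chairs stay distinguishable even though their embeddings are similar, which is the property that instance-specific queries depend on.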

The remainder of this paper is organized as follows. Section 2 reviews and analyzes recent literature, providing the necessary background on semantic scene understanding, 3D scene reconstruction, and VLN. Section 3 outlines the methodology behind our proposed 3D map. Section 4 discusses the experimental evaluation of our proposed 3D map's effectiveness. Finally, concluding remarks and directions for future work are presented in Section 5.

:::info This paper is available on arXiv under the CC BY-SA 4.0 Deed (Attribution-ShareAlike 4.0 International) license.

:::

:::info Authors:

(1) Laksh Nanwani, International Institute of Information Technology, Hyderabad, India; this author contributed equally to this work;

(2) Kumaraditya Gupta, International Institute of Information Technology, Hyderabad, India;

(3) Aditya Mathur, International Institute of Information Technology, Hyderabad, India; this author contributed equally to this work;

(4) Swayam Agrawal, International Institute of Information Technology, Hyderabad, India;

(5) A.H. Abdul Hafez, Hasan Kalyoncu University, Sahinbey, Gaziantep, Turkey;

(6) K. Madhava Krishna, International Institute of Information Technology, Hyderabad, India.

:::
