Building Open-Set 3D Representation: Feature Fusion and Geometric-Semantic Merging

Table of Links

Abstract and 1 Introduction

2. Related Works

2.1. Vision-and-Language Navigation

2.2. Semantic Scene Understanding and Instance Segmentation

2.3. 3D Scene Reconstruction

3. Methodology

3.1. Data Collection

3.2. Open-set Semantic Information from Images

3.3. Creating the Open-set 3D Representation

3.4. Language-Guided Navigation

4. Experiments

4.1. Quantitative Evaluation

4.2. Qualitative Results

5. Conclusion and Future Work, Disclosure statement, and References

3.3. Creating the Open-set 3D Representation

To complete the O3D-SIM, we build on the feature embeddings extracted for each object: object information is projected into 3D space, clustered, and associated across multiple images to create a comprehensive 3D scene representation. Figure 3 depicts how the semantic information is projected into 3D space and how the map is refined.

3.3.1. The O3D-SIM Initialization

The 3D map is initially created using a selected image, which acts as the reference frame for initialising our scene representation. This step establishes the foundational structure of our 3D scene, which is then progressively augmented with data from subsequent images to enrich the scene’s complexity and detail.

The data for objects within a 3D scene are organized as nodes within a dictionary, which starts empty. Objects are identified in the initial image together with their associated data: embedding features and mask information. For each object detected in the image, a 3D point cloud is created from the available depth information and the object’s mask. The 2D pixels are mapped into 3D space using the camera’s intrinsic parameters and the depth values, and the camera pose is then used to place the point cloud in the global coordinate system. To refine the scene representation, background filtering removes elements identified as background, such as walls or floors. These elements are excluded from further processing, particularly from the clustering stage, because they are not the focus of our scene representation.
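The projection step described above can be sketched as follows. This is a minimal illustration rather than the authors' implementation: it assumes a pinhole camera model, a depth image in metres, and a 4x4 camera-to-world pose matrix, and the function name `backproject_mask` is hypothetical.

```python
import numpy as np

def backproject_mask(depth, mask, K, T_world_cam):
    """Lift masked depth pixels to 3D points in the world frame.

    depth: (H, W) depth in metres; mask: (H, W) boolean object mask;
    K: (3, 3) pinhole intrinsics; T_world_cam: (4, 4) camera-to-world pose.
    All of these conventions are assumptions made for this sketch.
    """
    v, u = np.nonzero(mask & (depth > 0))      # pixel rows (v) and columns (u)
    z = depth[v, u]
    fx, fy, cx, cy = K[0, 0], K[1, 1], K[0, 2], K[1, 2]
    x = (u - cx) * z / fx                      # pinhole back-projection
    y = (v - cy) * z / fy
    pts_cam = np.stack([x, y, z, np.ones_like(z)], axis=1)  # (N, 4) homogeneous
    return (pts_cam @ T_world_cam.T)[:, :3]    # move into the global frame

# Toy usage: a 4x4 depth image, a 2x2 mask, and a camera shifted 1 m along x.
depth = np.full((4, 4), 2.0)
mask = np.zeros((4, 4), dtype=bool)
mask[1:3, 1:3] = True
K = np.array([[1.0, 0.0, 2.0], [0.0, 1.0, 2.0], [0.0, 0.0, 1.0]])
T = np.eye(4)
T[:3, 3] = [1.0, 0.0, 0.0]
points = backproject_mask(depth, mask, K, T)
```

Pixels with zero depth are skipped here because many depth sensors report missing measurements as zero; that choice is also an assumption.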

Each object’s point cloud is then refined using DBSCAN [34] clustering. The point cloud is first downsampled via voxel grid filtering, which reduces the number of points and the computational cost while preserving the spatial structure of the data. DBSCAN groups points that are closely packed together and labels points that lie alone in low-density regions as noise. In a post-clustering step, the largest cluster, which typically corresponds to the main object of interest within the point cloud, is identified. This filters out noise and irrelevant points, producing a cleaner representation of the object of interest.
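A small, self-contained sketch of this cleaning step is shown below. It is illustrative only: the DBSCAN here is a brute-force NumPy version suitable for toy inputs (a library implementation would normally be used), and the voxel size, `eps`, and `min_samples` values are assumptions, not values reported by the authors.

```python
import numpy as np

def voxel_downsample(points, voxel_size):
    """Replace all points that fall in the same voxel by their centroid."""
    keys = np.floor(points / voxel_size).astype(np.int64)
    _, inverse = np.unique(keys, axis=0, return_inverse=True)
    inverse = inverse.reshape(-1)
    n_voxels = inverse.max() + 1
    sums = np.zeros((n_voxels, 3))
    np.add.at(sums, inverse, points)
    counts = np.bincount(inverse, minlength=n_voxels)
    return sums / counts[:, None]

def dbscan(points, eps, min_samples):
    """Brute-force DBSCAN; returns one label per point, -1 meaning noise."""
    dists = np.linalg.norm(points[:, None] - points[None], axis=2)
    neighbours = [np.flatnonzero(row <= eps) for row in dists]
    core = np.array([len(nb) >= min_samples for nb in neighbours])
    labels = np.full(len(points), -1)
    cluster = 0
    for i in range(len(points)):
        if labels[i] != -1 or not core[i]:
            continue
        labels[i] = cluster
        stack = [i]
        while stack:
            j = stack.pop()
            if not core[j]:
                continue  # border points join a cluster but do not expand it
            for k in neighbours[j]:
                if labels[k] == -1:
                    labels[k] = cluster
                    stack.append(k)
        cluster += 1
    return labels

def largest_cluster(points, labels):
    """Keep only the points of the most populated non-noise cluster."""
    valid = labels[labels >= 0]
    if valid.size == 0:
        return points[:0]
    return points[labels == np.bincount(valid).argmax()]

# Toy usage: a dense 4x4x4 grid of points plus three isolated outliers.
g = np.array([0.01, 0.06, 0.11, 0.16])
blob = np.stack(np.meshgrid(g, g, g), axis=-1).reshape(-1, 3)
outliers = np.array([[5.0, 5.0, 5.0], [-4.0, 2.0, 1.0], [3.0, -3.0, 0.0]])
down = voxel_downsample(np.vstack([blob, outliers]), voxel_size=0.1)
obj = largest_cluster(down, dbscan(down, eps=0.2, min_samples=4))
```

In this toy case the 67 input points collapse to 11 voxel centroids, and the three isolated centroids are discarded as noise.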

The pose of an object in 3D space is determined by calculating the orientation of a bounding box, which offers a concise spatial representation of the object’s location and size. The 3D map is then initialized with a first set of nodes, each encapsulating feature embeddings, point cloud data, a bounding box, and the number of points in the associated point cloud. Each node also includes source information, which makes it possible to trace where the data came from and to link nodes to their 2D image counterparts.
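One way to picture a node and its bounding box is sketched below. The field names, the `SceneNode` class, and the PCA-based box fit are assumptions chosen for illustration; the text does not specify how the authors compute the box orientation or lay out a node.

```python
from dataclasses import dataclass, field
import numpy as np

def pca_bounding_box(points):
    """Oriented box from principal axes: returns (center, axes, extents)."""
    mean = points.mean(axis=0)
    _, axes = np.linalg.eigh(np.cov((points - mean).T))  # columns are box axes
    local = (points - mean) @ axes                        # points in box frame
    lo, hi = local.min(axis=0), local.max(axis=0)
    center = mean + axes @ ((lo + hi) / 2)
    return center, axes, hi - lo

@dataclass
class SceneNode:
    clip_embedding: np.ndarray
    dino_embedding: np.ndarray
    points: np.ndarray
    bbox: tuple                 # (center, axes, extents)
    n_points: int
    source_ids: list = field(default_factory=list)  # e.g. (image index, mask index)

def make_node(points, clip_emb, dino_emb, source_id):
    """Bundle everything known about one detection into a node."""
    return SceneNode(clip_emb, dino_emb, points,
                     pca_bounding_box(points), len(points), [source_id])

# Toy usage: the eight corners of a 4 x 2 x 1 box centred at (1, 2, 3).
corners = np.array([[x, y, z] for x in (-1.0, 3.0)
                    for y in (1.0, 3.0) for z in (2.5, 3.5)])
scene = {}                      # the scene dictionary starts empty
scene[0] = make_node(corners, np.ones(4), np.ones(4), source_id=(0, 0))
center, axes, extents = scene[0].bbox
```

Because `numpy.linalg.eigh` returns eigenvalues in ascending order, the extents come out sorted from the thinnest to the longest box dimension.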

3.3.2. Incremental Update of the O3D-SIM

After initializing the scene, we update the representation with data from new images, so that the 3D scene stays current and precise as additional information becomes available. The update iterates over the image sequence: for each new image, multi-object data is extracted and the scene is updated.

Objects are detected in each new image, and new nodes are created in the same way as for the initial image. These temporary nodes contain the 3D data for newly detected objects, which must either be merged into the existing scene or added as new nodes. The similarity between a newly detected node and the existing scene nodes is determined by combining visual similarity, derived from feature embeddings, with spatial (geometric) similarity, obtained from point cloud overlap, into an aggregate similarity measure. If this measure surpasses a predetermined threshold, the new detection is deemed to correspond to an existing object in the scene and is merged with that node; otherwise, it is added as a new node.
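The matching decision can be sketched as below. How the two similarities are combined is not stated in the text, so the weighted sum, the weight `w_visual`, the overlap `radius`, and the `threshold` are all assumptions; a single embedding per node is used for brevity, whereas the method relies on both CLIP and DINO features.

```python
import numpy as np

def cosine(a, b):
    """Cosine similarity between two embedding vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def overlap_ratio(pts_new, pts_old, radius):
    """Fraction of new points that have an existing point within `radius`."""
    dists = np.linalg.norm(pts_new[:, None] - pts_old[None], axis=2)
    return float((dists.min(axis=1) <= radius).mean())

def match_node(new, scene, w_visual=0.5, radius=0.05, threshold=0.6):
    """Return the id of the best-matching scene node, or None if no
    aggregate score exceeds the threshold (all values are assumed)."""
    best_id, best_score = None, threshold
    for node_id, node in scene.items():
        visual = cosine(new["embedding"], node["embedding"])
        spatial = overlap_ratio(new["points"], node["points"], radius)
        score = w_visual * visual + (1.0 - w_visual) * spatial
        if score > best_score:
            best_id, best_score = node_id, score
    return best_id

# Toy usage: one detection that re-observes the chair, one that is new.
chair_pts = np.array([[0.0, 0.0, 0.0], [0.1, 0.0, 0.0], [0.0, 0.1, 0.0]])
scene = {
    "chair": {"embedding": np.array([1.0, 0.0]), "points": chair_pts},
    "lamp": {"embedding": np.array([0.0, 1.0]), "points": chair_pts + 3.0},
}
same_chair = {"embedding": np.array([0.9, 0.1]), "points": chair_pts + 0.01}
new_plant = {"embedding": np.array([-1.0, 0.0]), "points": chair_pts - 3.0}
matched = match_node(same_chair, scene)    # merge candidate
unmatched = match_node(new_plant, scene)   # would be added as a new node
```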

Merging involves integrating the point clouds and averaging the feature embeddings. A weighted average of the CLIP and DINO embeddings is calculated, with weights based on each node’s source key information so that nodes with more source identifiers contribute more. If a new node needs to be added instead, it is incorporated into the scene dictionary.
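A minimal sketch of such a merge follows, assuming the weight of each node is simply its number of source identifiers. The exact weighting scheme is not given in the text, and the dictionary keys used here are hypothetical.

```python
import numpy as np

def merge_nodes(existing, new):
    """Merge `new` into `existing`: concatenate the point clouds and average
    the embeddings, weighting each node by its number of source identifiers
    (an assumed weighting)."""
    w_old, w_new = len(existing["source_ids"]), len(new["source_ids"])
    total = w_old + w_new
    return {
        "clip": (w_old * existing["clip"] + w_new * new["clip"]) / total,
        "dino": (w_old * existing["dino"] + w_new * new["dino"]) / total,
        "points": np.vstack([existing["points"], new["points"]]),
        "source_ids": existing["source_ids"] + new["source_ids"],
    }

# Toy usage: a node seen in three images absorbs a single new detection.
old = {"clip": np.array([1.0, 0.0]), "dino": np.array([0.0, 2.0]),
       "points": np.zeros((2, 3)), "source_ids": [(0, 1), (1, 0), (2, 4)]}
det = {"clip": np.array([0.0, 1.0]), "dino": np.array([4.0, 2.0]),
       "points": np.ones((1, 3)), "source_ids": [(3, 2)]}
merged = merge_nodes(old, det)
```

With three sources against one, the merged CLIP embedding stays closer to the existing node. Re-normalizing the averaged embeddings afterwards may be desirable if cosine similarity is used downstream; the text does not say whether this is done.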

Scene refinement occurs once objects from all images in the input sequence have been added. This process consolidates nodes that represent the same physical object but were initially identified as separate due to occlusions, viewpoint changes, or similar factors. It employs an overlap matrix to identify nodes that share spatial occupancy and merges them into a single node. The scene is finalized by discarding nodes that fail to meet the minimum number of points or the detection criteria. The result is a refined final scene representation: the Open-set 3D Semantic Instance Map, or O3D-SIM.
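The refinement pass might look like the sketch below, which works on bare point clouds for brevity. The overlap definition, the thresholds, and the use of union-find to group overlapping nodes are assumptions made for illustration, not details taken from the paper.

```python
import numpy as np

def overlap_matrix(clouds, radius):
    """M[i, j] = fraction of cloud i's points within `radius` of cloud j."""
    n = len(clouds)
    M = np.zeros((n, n))
    for i in range(n):
        for j in range(n):
            if i != j:
                d = np.linalg.norm(clouds[i][:, None] - clouds[j][None], axis=2)
                M[i, j] = (d.min(axis=1) <= radius).mean()
    return M

def refine(clouds, radius=0.05, overlap_thresh=0.5, min_points=3):
    """Merge clouds that overlap strongly, then drop clouds that are too small."""
    M = overlap_matrix(clouds, radius)
    parent = list(range(len(clouds)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]   # path compression
            i = parent[i]
        return i

    for i, j in zip(*np.nonzero(M >= overlap_thresh)):
        parent[find(int(i))] = find(int(j))  # union the two groups

    groups = {}
    for i, cloud in enumerate(clouds):
        groups.setdefault(find(i), []).append(cloud)
    merged = [np.vstack(g) for g in groups.values()]
    return [c for c in merged if len(c) >= min_points]

# Toy usage: A and B are the same object seen twice; C is a tiny stray node.
A = np.array([[0.0, 0.0, 0.0], [0.1, 0.0, 0.0], [0.0, 0.1, 0.0], [0.1, 0.1, 0.0]])
B = A + 0.01
C = np.array([[5.0, 5.0, 5.0], [5.1, 5.0, 5.0]])
final_scene = refine([A, B, C])
```

Here A and B collapse into one 8-point node, and C is discarded because it falls below the assumed minimum point count.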


:::info Authors:

(1) Laksh Nanwani, International Institute of Information Technology, Hyderabad, India; this author contributed equally to this work;

(2) Kumaraditya Gupta, International Institute of Information Technology, Hyderabad, India;

(3) Aditya Mathur, International Institute of Information Technology, Hyderabad, India; this author contributed equally to this work;

(4) Swayam Agrawal, International Institute of Information Technology, Hyderabad, India;

(5) A.H. Abdul Hafez, Hasan Kalyoncu University, Sahinbey, Gaziantep, Turkey;

(6) K. Madhava Krishna, International Institute of Information Technology, Hyderabad, India.

:::

:::info This paper is available on arXiv under the CC BY-SA 4.0 Deed (Attribution-ShareAlike 4.0 International) license.

:::

\

Ayrıca Şunları da Beğenebilirsiniz

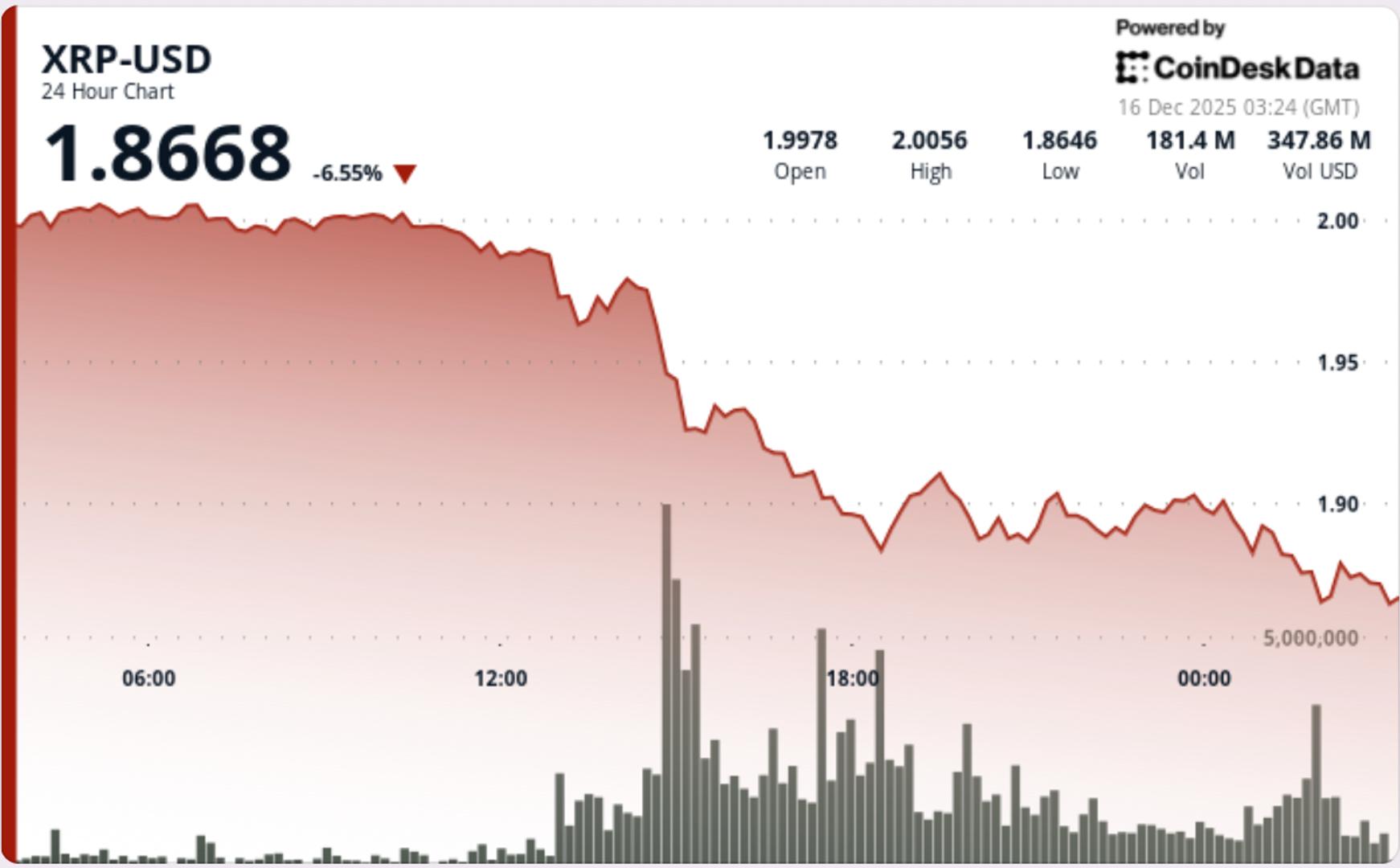

XRP price weakens at critical level, raising risk of deeper pullback

Copy linkX (Twitter)LinkedInFacebookEmail

Warsaw Stock Exchange Launches Poland's First Bitcoin ETF